An artificial intelligence (AI) algorithm distinguished between benign and malignant masses on breast ultrasound at a high level of accuracy -- perhaps even exceeding the diagnostic performance of less-experienced radiologists, according to research published online March 19 in the Japanese Journal of Radiology.

Dr. Tomoyuki Fujioka and colleagues from Tokyo Medical and Dental University in Japan trained a deep-learning model to characterize breast masses on ultrasound and found that its sensitivity and accuracy both exceeded 92% in testing, with a specificity of 87.5%. What's more, it performed better than a radiologist with four years of breast imaging experience and comparably to two more-experienced radiologists.

"Our results imply that this [convolutional neural network] model could be helpful to radiologists to diagnose breast masses, especially those with only a few years of clinical experience," the authors wrote.

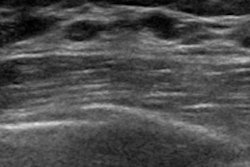

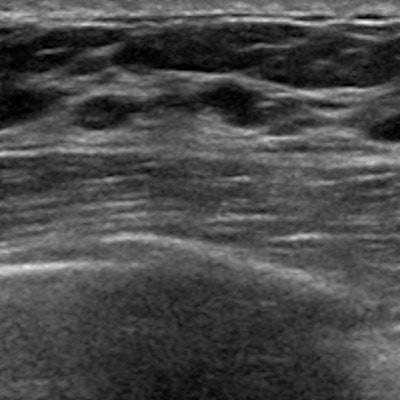

Although breast ultrasound is useful for differentiating masses, the morphologic features of benign and malignant lesions have substantial overlap. Interpretation of these images depends greatly on radiologist experience, leading to the potential for significant interobserver variability, according to the researchers.

As a result, they sought to train a convolutional neural network (CNN) to distinguish between benign and malignant breast masses on ultrasound studies. The researchers retrospectively collected 480 images of 97 benign masses and 467 images of 143 malignant masses for use in training. The deep-learning model, which was developed using the GoogLeNet CNN architecture, was then tested on a set of 120 images showing 48 benign masses and 72 malignant masses.

In addition, three radiologists -- with four, eight, and 20 years of breast imaging experience, respectively -- interpreted the test cases.

AI performance for characterizing masses on breast ultrasound

| | Radiologists (range) | CNN model |
| --- | --- | --- |
| Sensitivity | 58.3%-91.7% | 95.8% |
| Specificity | 60.4%-77.1% | 87.5% |
| Accuracy | 65.8%-79.2% | 92.5% |
| Diagnostic performance (area under the curve) | 0.728-0.845 | 0.913 |
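
As a rough check on how the CNN figures in the table relate to the test set, the short Python sketch below recomputes sensitivity, specificity, and accuracy from raw counts. The test-set sizes (72 malignant, 48 benign) come from the article; the true-positive and true-negative counts (69 and 42) are an assumption, back-calculated from the reported percentages rather than stated by the researchers, and the function name `diagnostic_metrics` is purely illustrative.

```python
# Test-set composition reported in the article.
N_MALIGNANT = 72
N_BENIGN = 48

# ASSUMPTION: these counts are not given in the article. They are
# back-calculated from the reported 95.8% sensitivity and 87.5% specificity.
TRUE_POSITIVES = 69  # malignant masses the model labeled malignant
TRUE_NEGATIVES = 42  # benign masses the model labeled benign


def diagnostic_metrics(tp, tn, n_pos, n_neg):
    """Return (sensitivity, specificity, accuracy) as fractions."""
    sensitivity = tp / n_pos                  # share of malignant masses caught
    specificity = tn / n_neg                  # share of benign masses cleared
    accuracy = (tp + tn) / (n_pos + n_neg)    # share of all masses classified correctly
    return sensitivity, specificity, accuracy


sens, spec, acc = diagnostic_metrics(
    TRUE_POSITIVES, TRUE_NEGATIVES, N_MALIGNANT, N_BENIGN
)
print(f"Sensitivity: {sens:.1%}")  # 95.8%
print(f"Specificity: {spec:.1%}")  # 87.5%
print(f"Accuracy:    {acc:.1%}")   # 92.5%
```

Under these assumed counts, the three percentages match the CNN column of the table, which suggests the reported figures are mutually consistent for a 120-mass test set.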

The CNN's diagnostic performance was significantly better than that of the least-experienced radiologist (p = 0.01) and statistically comparable to that of the other two radiologists (p = 0.08 and 0.14). Delving further into the results, the researchers observed that the radiologists had higher interobserver agreement with each other than they did with the deep-learning model.

"We must assume that the CNN models and radiologists find and evaluate completely different aspects of the images," they wrote.