A breast ultrasound artificial intelligence (AI) algorithm was able to differentiate breast masses with high accuracy by combining analysis of B-mode and color Doppler images, according to research published online January 31 in European Radiology. Its performance was even comparable to that of experienced radiologists.

A research team led by Xuejun Qian, PhD, of the University of Southern California and Bo Zhang, PhD, of Central South University in Hunan, China, found that their deep-learning algorithm showed substantial agreement with radiologists in BI-RADS categorization. It also yielded high sensitivity and specificity.

"The decisions determined by the model and quantitative measurements of each descriptive category can potentially help radiologists to optimize clinical decision-making," the authors wrote.

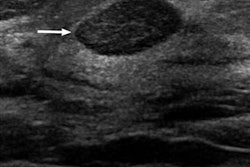

Breast ultrasound interpretation has been characterized by variable inter- and intrareader reproducibility, with a higher false-positive rate than other imaging tests, according to the researchers. Seeking to develop an automated breast classification system that could improve consistency and performance, they gathered a training set of 103,212 breast masses and a validation set of 2,748 independent breast masses at two Chinese hospitals between August 2014 and March 2017. They also assembled a test set of 605 biopsy-proven breast masses classified as BI-RADS 2 to 5 from March 2017 to September 2017.

Next, the researchers trained two convolutional neural networks: one based just on B-mode images and the other based on both B-mode and color Doppler images. On the validation cases, the model based on both B-mode and color Doppler image analysis had a higher level of agreement (kappa = 0.73) with the original interpreting radiologists for BI-RADS categorization than the network based only on B-mode images (kappa = 0.58). The difference was statistically significant (p < 0.001).
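The kappa values above measure how often the model and the radiologists assigned the same BI-RADS category, after discounting the agreement expected by chance alone. The article does not say whether the study used plain or weighted kappa, so the following is only a minimal sketch of unweighted Cohen's kappa, and the BI-RADS labels in the usage example are hypothetical rather than study data.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa between two equal-length lists of category labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of cases where both raters chose the same category
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal category frequencies
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1:
        # Degenerate case: both raters used one identical category for every case
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical BI-RADS categories for six masses (not from the study)
model = ["3", "4", "4", "5", "2", "3"]
radiologist = ["3", "4", "5", "5", "2", "4"]
print(round(cohen_kappa(model, radiologist), 3))
```

In this toy case the two raters agree on four of six masses (observed agreement 0.667), but because chance agreement is 0.25, kappa is lower, at about 0.556. This is why the study reports kappa rather than raw percent agreement.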

They then evaluated the performance of both models on the test set of 605 masses.

Performance of neural networks for classifying breast masses on the test set

| Metric | Model based on B-mode images | Model based on both B-mode and color Doppler images |
|---|---|---|
| Sensitivity | 96.8% | 97.1% |
| Specificity | 75.5% | 88.7% |
| Accuracy | 85.3% | 92.6% |
| Area under the curve (AUC) | 0.956 | 0.982 |
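For readers less familiar with these metrics: sensitivity is the share of malignant masses correctly flagged, specificity is the share of benign masses correctly cleared, and accuracy is the share of all masses classified correctly. AUC summarizes performance across every possible decision threshold. The article does not report the underlying confusion-matrix counts or how AUC was computed, so the sketch below uses hypothetical counts and scores. It computes AUC with the pairwise (Mann-Whitney) formulation, which is one standard method but not necessarily the one the authors used.

```python
def binary_metrics(tp, fn, tn, fp):
    """Sensitivity, specificity, and accuracy from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # malignant masses correctly called malignant
    specificity = tn / (tn + fp)  # benign masses correctly called benign
    accuracy = (tp + tn) / (tp + fn + tn + fp)  # all masses classified correctly
    return sensitivity, specificity, accuracy


def auc_from_scores(scores_malignant, scores_benign):
    """AUC as the probability that a random malignant mass outscores a random benign one."""
    wins = 0.0
    for m in scores_malignant:
        for b in scores_benign:
            if m > b:
                wins += 1.0
            elif m == b:
                wins += 0.5  # ties count as half a win
    return wins / (len(scores_malignant) * len(scores_benign))


# Hypothetical counts and malignancy scores (not from the study)
print(binary_metrics(tp=90, fn=10, tn=80, fp=20))
print(auc_from_scores([0.9, 0.8, 0.4], [0.3, 0.4, 0.1]))
```

With these made-up counts, sensitivity is 0.90, specificity 0.80, and accuracy 0.85. The sketch also shows why a model can gain accuracy mainly through specificity, as the Doppler-based model did: fewer false positives raise both specificity and accuracy even when sensitivity barely changes.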

The researchers noted that adding color Doppler information improved the algorithm's specificity and accuracy by a statistically significant margin (p < 0.001). The small increase in sensitivity was not statistically significant, however.

"Overall, Doppler information should be incorporated into breast [ultrasound] examination protocols for breast masses, and the use of such a dual-modal system may improve cancer diagnostics," the authors wrote.

The researchers also had 10 radiologists with three to 20 years of breast imaging experience assess the 605 masses in the test set. Nine of the 10 radiologists had a sensitivity ranging from 87.8% to 94.6%, while the remaining radiologist had a sensitivity of 64.7%. That same radiologist had the highest specificity (98.5%), with the other nine producing specificity ranging from 84.1% to 91.7%. Overall, the 10 readers produced an AUC of 0.948.

"These results indicate that the performance of model 2 [the B-mode and color Doppler model] reached the levels of the human experts," the authors concluded.