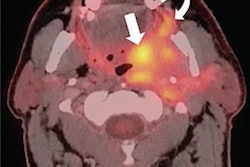

BOSTON -- A unified deep-learning framework can identify and accurately describe parotid gland tumors (PGTs) on CT exams, a study presented October 21 at the Conference on Machine Intelligence in Medical Imaging (CMIMI) found.

Researchers led by Wei Shao, PhD, from the University of California, Irvine observed high accuracy from a multi-stage pipeline combining a first-pass screening model with a subsequent focused segmentation model, which could help identify PGTs missed in real clinical workflows. The findings were shared at the Society for Imaging Informatics in Medicine (SIIM)-hosted meeting.

“We built an automatic screening pipeline that can accurately discover potential parotid tumor patients on routine CT imaging,” Shao said.

PGTs are the most common salivary gland tumors, with many being found incidentally on CT exams. While the incidence rate for parotid tumors is one to three per 100,000 people, one in five PGTs are malignant. Shao said with increased imaging volumes, many PGTs are overlooked by radiologists who prioritize acute pathology.

“Just because it’s not in the radiology report does not mean it’s a true-negative [case]” Shao said.

Shao presented his team’s combined deep learning approach for opportunistic PGT detection of CT. The researchers focused on improving complementary objectives for tumor screening and segmentation.

The researchers aggregated a retrospective dataset of 11,449 consecutive non-contrast head CT exams from two academic centers. An expert neuroradiologist identified and annotated PGTs greater than 10 mm from selected radiology and histopathology reports. Final analysis included 219 PGTs.

The team employed a multistage deep-learning pipeline to optimize its model for PGT detection. Here, an initial model localizes each parotid gland. At the same time, a single 3D U-Net model implements segmentation and screening tasks. The researchers calibrated thresholds for positive voxel predictions to convert segmentation outputs into binary screening results.

The best screening model from the combined approach achieved high marks for per-exam specificity, sensitivity, accuracy, and other measures. The best segmentation model, meanwhile, achieved a moderate Dice score.

| Performance of combined deep-learning models in identifying PGTs | |

|---|---|

| Measure (for screening model unless noted otherwise) | Value |

| Specificity | 94.7% |

| Sensitivity | 71.9% |

| Positive predictive value | 85.8% |

| Negative predictive value | 87.8% |

| Accuracy | 87.2% |

| Dice score (segmentation model) | 0.71 |

Of the positive predictions, six tumors were missed by the original interpreting physician. The team noted that in general, cross-entropy outperformed focal loss for segmentation, while the opposite went for screening due to improved specificity and lower false positives.

Finally, the team found that using negative training examples led to decreased tumor Dice scores while reducing false positives for the screening task.

Shao said that the results point toward the approach’s ability to facilitate downstream work for physicians.

Check out AuntMinnie.com's coverage of CMIMI 2024 here.