BOSTON -- AI's potential in radiology continues to grow, and department leaders should follow best practices when deploying it, according to an October 21 presentation at the Conference on Machine Intelligence in Medical Imaging (CMIMI).

In her talk at the meeting, which is hosted by the Society for Imaging Informatics in Medicine (SIIM), Nina Kottler, MD, from Radiology Partners, outlined how radiology leaders can navigate the validation and clinical deployment stages of AI.

“Until we get to a place where we have best practices … it’s going to be hard to get to that high-growth adoption, and that’s what we’re looking for,” said Kottler, who also holds membership positions with the Society for Imaging Informatics in Medicine (SIIM), the RSNA, the American College of Radiology (ACR), and RADequal.

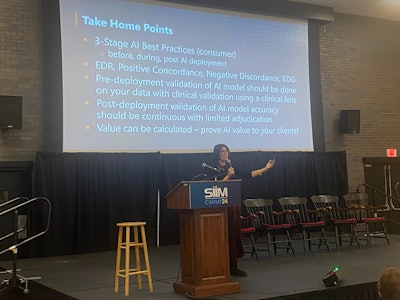

Nina Kottler, MD, from Radiology Partners talks about best practices radiology departments can implement when measuring whether an AI model can add value and improve imaging interpretation in clinical practice at CMIMI 2024.

It’s no secret that radiologists continue to explore AI’s potential, from serving as a second reader of images to generating medical reports from input data.

Kottler said that, as best practices, radiology departments looking to deploy AI models should further validate the models on their own datasets before deployment, carry out continuous monitoring and education, and find ways to prove the value of AI models in the clinic.

Model validation

While the U.S. Food and Drug Administration (FDA) has its own criteria for validating AI models, Kottler said that for successful clinical deployment, hospital systems should also validate models on their own patient data. A model’s performance can vary from one dataset to another, so results reported to the FDA may not generalize to a given site’s patients.

“You can’t just rely on the FDA data,” Kottler said. “You need to run [AI models] on your own data and get your own accuracy.”

Because measures such as sensitivity, specificity, and prevalence can change between datasets, radiologists should focus on positive predictive value (PPV), Kottler said. If the model’s PPV on local data is on par with or higher than its PPV on the FDA dataset, the model may succeed in practice.
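As a rough illustration of that comparison, the sketch below computes PPV from local validation counts and checks it against a reference PPV, such as one derived from a model’s FDA submission. The function names, the counts, and the optional `tolerance` used to express “on par with” are all hypothetical, chosen only for illustration.

```python
def ppv(true_positives: int, false_positives: int) -> float:
    """Positive predictive value: share of AI-positive calls that are truly positive."""
    flagged = true_positives + false_positives
    if flagged == 0:
        raise ValueError("PPV is undefined when the model flags no cases")
    return true_positives / flagged


def meets_reference_ppv(local_ppv: float, reference_ppv: float, tolerance: float = 0.0) -> bool:
    """True if the local PPV is on par with or higher than the reference PPV.

    `tolerance` is an assumed, site-chosen margin for what counts as "on par";
    the default of 0.0 requires the local PPV to match or exceed the reference.
    """
    return local_ppv >= reference_ppv - tolerance


# Hypothetical local results: 45 confirmed positives among 60 AI-flagged studies.
local = ppv(true_positives=45, false_positives=15)
# Hypothetical reference PPV taken from the model's FDA submission data.
reference = 0.70
print(f"Local PPV: {local:.2f}, reference PPV: {reference:.2f}")
print("On par or better:", meets_reference_ppv(local, reference))
```

Computing PPV from raw counts keeps the local number directly comparable to whatever the vendor reports, as long as both use the same definition of a positive finding.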

For effective model validation, Kottler outlined a five-step plan: measuring performance statistics; measuring AI-enhanced detection; examining “wow” cases, in which AI by itself accurately detects abnormal findings; categorizing false-positive and false-negative cases; and summarizing the results to decide whether the AI model would succeed in practice.
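Parts of this plan can be sketched in Python. The sketch assumes each validation case records a ground-truth label, the AI result, and the original radiologist read; the `Case` type, its field names, and the sample cases are hypothetical. It computes basic performance statistics (step 1), lists “wow” cases where the AI caught a true finding the radiologist missed (step 3), and collects false positives and false negatives so a team can review and categorize them (step 4). Step 2 and the final summary and decision are left to the department.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Case:
    """Hypothetical record for one validation study."""
    case_id: str
    truth_positive: bool        # ground truth: abnormal finding present
    ai_positive: bool           # AI model flagged the finding
    radiologist_positive: bool  # original radiologist report described the finding


def _ratio(num: int, den: int) -> Optional[float]:
    # Return None instead of dividing by zero when a category is empty.
    return num / den if den else None


def review_cases(cases: list[Case]) -> dict:
    tp = [c for c in cases if c.ai_positive and c.truth_positive]
    fp = [c for c in cases if c.ai_positive and not c.truth_positive]
    fn = [c for c in cases if not c.ai_positive and c.truth_positive]
    tn = [c for c in cases if not c.ai_positive and not c.truth_positive]
    return {
        # Step 1: basic performance statistics.
        "sensitivity": _ratio(len(tp), len(tp) + len(fn)),
        "specificity": _ratio(len(tn), len(tn) + len(fp)),
        "ppv": _ratio(len(tp), len(tp) + len(fp)),
        # Step 3: "wow" cases, true findings the AI caught that the radiologist missed.
        "wow_cases": [c.case_id for c in tp if not c.radiologist_positive],
        # Step 4: AI errors, grouped so the team can review and categorize them.
        "false_positives": [c.case_id for c in fp],
        "false_negatives": [c.case_id for c in fn],
    }


cases = [
    Case("A", truth_positive=True, ai_positive=True, radiologist_positive=True),
    Case("B", truth_positive=True, ai_positive=True, radiologist_positive=False),
    Case("C", truth_positive=False, ai_positive=True, radiologist_positive=False),
    Case("D", truth_positive=True, ai_positive=False, radiologist_positive=True),
    Case("E", truth_positive=False, ai_positive=False, radiologist_positive=False),
]
summary = review_cases(cases)
print(summary["wow_cases"], summary["false_positives"], summary["false_negatives"])
```

Returning case IDs rather than counts keeps the individual studies available for the manual review that the categorization step calls for.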

AI-enhanced detection considers how many true findings an AI model detects that a radiologist or radiology team missed. For example, if a radiologist and an AI model together accurately detect 50 abnormal findings in a dataset, and the radiologists found 40 of them, then the remaining 10 findings caught by AI alone count toward the AI-enhanced detection rate. Measured against the radiologists’ 40 detections, the AI-enhanced detection rate in this case would be 25%, a high enhancement number.
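The arithmetic in the 50-versus-40 example can be written out as a small function. The name and the input checks are illustrative; the key point is that the denominator is the radiologists’ own detections, which is what makes 10 additional findings come out to 25%.

```python
def ai_enhanced_detection_rate(total_true_findings: int, radiologist_found: int) -> float:
    """True findings caught only by AI, relative to the radiologists' own true detections."""
    if radiologist_found <= 0:
        raise ValueError("radiologist_found must be positive")
    if total_true_findings < radiologist_found:
        raise ValueError("total findings cannot be fewer than the radiologists' findings")
    ai_only = total_true_findings - radiologist_found
    return ai_only / radiologist_found


# Example numbers: 50 combined true findings, 40 of them found by radiologists.
rate = ai_enhanced_detection_rate(total_true_findings=50, radiologist_found=40)
print(f"AI-enhanced detection rate: {rate:.0%}")  # prints "AI-enhanced detection rate: 25%"
```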

Continuous validation and monitoring

Successful deployment and integration of AI models into clinical practice isn’t a “set it and forget it” situation, Kottler said. AI is susceptible to a phenomenon called data drift, in which the statistical properties of the data a model sees in practice shift away from those of the data used to validate it. Over time, this can reduce the model’s accuracy.

Kottler said that continuous monitoring and validation are needed to combat data drift and bias. Metrics such as positive concordance and negative discordance can help set “alarms” for declining accuracy. To calculate them, radiologist reports are fed into a large language model, and the AI results are compared with the model’s reading of those reports. The bigger the difference, the more discordant the results.
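The presentation as reported does not define these metrics precisely, so the sketch below assumes one plausible reading: positive concordance is the share of AI-positive studies whose report, as interpreted by the language model, also describes the finding, and negative discordance is the share of AI-negative studies whose report describes the finding anyway. The function name and the sample data are hypothetical.

```python
from typing import Optional


def concordance_metrics(pairs: list[tuple[bool, bool]]) -> dict[str, Optional[float]]:
    """pairs: (ai_positive, report_positive) per study, where report_positive is the
    finding status the language model extracted from the radiologist report (assumed)."""
    ai_pos = [report for ai, report in pairs if ai]
    ai_neg = [report for ai, report in pairs if not ai]
    # Assumed definition: share of AI-positive studies that the report also calls positive.
    positive_concordance = sum(ai_pos) / len(ai_pos) if ai_pos else None
    # Assumed definition: share of AI-negative studies that the report calls positive.
    negative_discordance = sum(ai_neg) / len(ai_neg) if ai_neg else None
    return {
        "positive_concordance": positive_concordance,
        "negative_discordance": negative_discordance,
    }


# Hypothetical week of studies: (AI result, LLM-extracted report result).
week = [
    (True, True), (True, True), (True, False), (True, True),
    (False, False), (False, True), (False, False), (False, False),
]
metrics = concordance_metrics(week)
print(metrics)
```

Tracking both numbers separately shows whether disagreement comes mainly from AI flags the radiologist did not confirm or from findings the AI did not flag.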

Subclass analysis can also be used to examine where AI results and the language model’s reading of the reports disagree, breaking results down by characteristics such as radiologist features, patient demographics, scanner information, protocol details, and imaging locations.
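A subclass breakdown can be sketched by computing the same metric separately for each value of a metadata field. This sketch assumes positive concordance means the share of AI-positive studies whose report also describes the finding; the study dictionaries and the field names (`scanner`, `ai_positive`, `report_positive`) are hypothetical.

```python
from collections import defaultdict


def positive_concordance_by_subgroup(studies: list[dict], key: str) -> dict:
    """Positive concordance per value of a metadata field such as "scanner".

    Subgroups with no AI-positive studies do not appear in the result.
    """
    counts = defaultdict(lambda: [0, 0])  # subgroup -> [report agreements, AI-positive studies]
    for study in studies:
        if study["ai_positive"]:
            group = counts[study[key]]
            group[1] += 1
            group[0] += int(study["report_positive"])
    return {subgroup: agree / total for subgroup, (agree, total) in counts.items()}


studies = [
    {"scanner": "A", "ai_positive": True, "report_positive": True},
    {"scanner": "A", "ai_positive": True, "report_positive": True},
    {"scanner": "B", "ai_positive": True, "report_positive": False},
    {"scanner": "B", "ai_positive": True, "report_positive": True},
    {"scanner": "B", "ai_positive": False, "report_positive": False},
]
print(positive_concordance_by_subgroup(studies, key="scanner"))
```

The same function can be pointed at any other field, such as protocol or imaging location, by changing `key`.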

From there, departments can set up alarms that track concordance over time, such as flagging a 10% drop in positive concordance compared with the AI model’s historical average.
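An alarm of this kind can be sketched as a threshold check against the historical mean. The article does not say whether the 10% drop is relative or in percentage points; this sketch assumes a relative drop, and the function name and values are hypothetical. An absolute rule would instead compare against `baseline - 0.10`.

```python
from statistics import mean


def concordance_alarm(history: list[float], current: float, max_relative_drop: float = 0.10) -> bool:
    """Return True if current positive concordance has fallen by at least
    max_relative_drop relative to the historical average (assumed relative rule)."""
    if not history:
        raise ValueError("need at least one historical value")
    baseline = mean(history)
    return current <= baseline * (1 - max_relative_drop)


# Hypothetical weekly positive-concordance values for one AI model.
history = [0.88, 0.90, 0.92]
print(concordance_alarm(history, current=0.85))  # modest dip, below the alarm threshold
print(concordance_alarm(history, current=0.75))  # large drop, raises the alarm
```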

Proving value

Proving value for deployed AI models can be a lengthy process. However, some calculations can help assess a model’s value in clinical practice.

Kottler said this is where the enhanced detection rate (EDR) comes into play. EDR represents the theoretical maximum clinical benefit: it assumes that the radiologist accepts every correct AI result and is never swayed by an incorrect one.

This measure analyzes how much AI models can add to imaging interpretation and helps manage the inherent bias between radiologists and AI systems, Kottler said.

Data scientists should calculate EDR before clinical deployment and then calculate the observed, unrealized EDR after deployment. Subtracting the post-deployment value from the pre-deployment EDR shows the enhanced detection actually gained from the AI model.
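The before-and-after arithmetic can be sketched as follows. No formula was given for either quantity, so this sketch assumes both are expressed like the AI-enhanced detection rate (AI-only true findings divided by the radiologists’ own true detections) and share the same denominator, which is what makes the subtraction meaningful. The function name and all counts are hypothetical; `Fraction` is used to keep the subtraction exact.

```python
from fractions import Fraction


def edr(ai_only_true_findings: int, radiologist_true_findings: int) -> Fraction:
    """Enhanced detection rate, assumed here to be AI-only true findings divided by
    the radiologists' own true findings (same convention as the 50-vs-40 example)."""
    if radiologist_true_findings <= 0:
        raise ValueError("radiologist_true_findings must be positive")
    return Fraction(ai_only_true_findings, radiologist_true_findings)


# Hypothetical pre-deployment validation: AI alone caught 10 true findings that
# radiologists missed, against 40 radiologist detections (theoretical maximum).
pre_deployment_edr = edr(ai_only_true_findings=10, radiologist_true_findings=40)

# Hypothetical post-deployment audit: 4 of those AI-correct findings were still
# not reported by radiologists reading with the AI (the unrealized portion).
unrealized_edr = edr(ai_only_true_findings=4, radiologist_true_findings=40)

realized_gain = pre_deployment_edr - unrealized_edr
print(f"Pre-deployment EDR: {float(pre_deployment_edr):.0%}")  # 25%
print(f"Unrealized EDR:     {float(unrealized_edr):.0%}")      # 10%
print(f"Realized gain:      {float(realized_gain):.0%}")       # 15%
```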

“Hopefully, this gives you a bit of a framework, so that when you’re looking to deploy AI, you know the things you want it to be looking at. And it’s great to think about it before, during, and after deployment,” Kottler said.

Check out AuntMinnie.com's coverage of CMIMI 2024.