Installing an automated radiologist peer-review system that is integrated with a PACS network has many benefits, such as improved workflow and data tracking. But radiology departments may still face challenges in getting radiologists to actually use the system.

Radiologists at Penn State Hershey Medical Center hoped to increase radiologist compliance with peer review by implementing an automated version of eRadPeer, the American College of Radiology's (ACR) peer-review program. How well the effort succeeded was the subject of a poster presentation by Dr. Jonelle Petscavage, assistant director of medical image management and assistant professor of radiology, at last month's Society for Imaging Informatics in Medicine (SIIM) meeting.

While radiologists may not enjoy peer review, the benefits of an automated version of eRadPeer outweigh the drawbacks, Petscavage concluded. But departments may need to resort to more than just process automation to get radiologists to fully embrace peer review.

The importance of peer review

Implementing and managing a peer-review program has become a necessity for radiologists. It's an ACR requirement for hospital accreditation.

The ACR made the process easier when it designed the RadPeer system, which began as paper cards that imaging facilities would complete and mail to the organization. An electronic version was offered in 2005, and the card system was phased out by 2011. To differentiate the two systems during that six-year period, the electronic version was called eRadPeer.

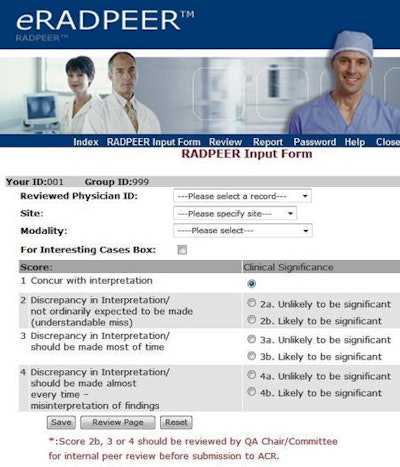

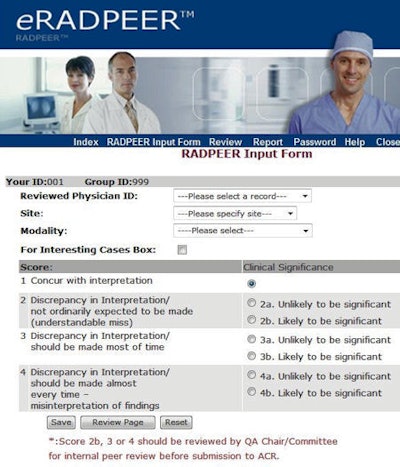

Radiologists using RadPeer score the interpretations of their peers using a standardized four-point rating scale, in which 1 means a correct diagnosis and 4 means an obvious misinterpretation. A radiology department can also store cases in a database for internal review.

The eRadPeer system requires that individual radiologists log in to the ACR database, with unique identifiers provided for the department or radiology group as a whole and for each participating radiologist. After a radiologist has selected a study for review -- generally a prior study associated with a current one the radiologist is interpreting -- the radiologist reinterprets the study. He or she then enters the name of the radiologist whose study is being reviewed, the site, the type of modality, the score, and comments in a free-text box if the exam is designated as an interesting case.

Screenshot from the eRadPeer system. Image courtesy of the ACR.

Reports provide summary statistics and comparisons for each participating physician by modality, summary data for the group by modality, and data summed across all RadPeer participants. A radiology chairman or other designated individual can access the reports online at any time.

Although getting rid of paper has produced many benefits, the electronic version of RadPeer also has potential issues, as Hershey's radiology department discovered after implementing the system in 2009. There were concerns about the randomness of study selection and the ability to incorporate all imaging modalities. Reviewers had to manually initiate a peer review when they believed they had identified a suitable case.

Additionally, peer review had to be entered into a database that was separate from the PACS. Also, depending upon a department's protocol, the radiologist who performed the peer review might be responsible for advising a colleague of a negative review.

Automating eRadPeer

To solve these problems, Hershey's radiology department automated its eRadPeer system and integrated it with the facility's PACS network during the summer of 2010. By adding plug-in software (Primordial Design) for its PACS (ImageCast, GE Healthcare) and purchasing a separate server -- the RIS alternative report server -- loaded with the two most recent years of radiology reports, the department made peer review an integrated part of its workflow.

The two most important benefits of the automated eRadPeer system are the elimination of a separate sign-on and the reduction of radiologists' bias in selecting cases to review, Petscavage said.

With the integrated system, when a radiologist opens an exam with a prior imaging study of the same subspecialty performed within the past two years that has not already been peer reviewed, a pop-up window appears within one second and prompts the radiologist to complete a peer review. The peer-review window shows the text of the prior report, automatically populates a field with the name of the radiologist who authored the report, and includes a list of possible ratings and a field box to add comments. Upon completion, the review is transmitted to the ACR.

The system automatically prompts radiologists on weekdays to complete three reviews a day. A radiologist may decline to perform a review, but he or she will continue to be prompted until the daily quota is filled. This does not prevent intentional bias by a radiologist regarding which studies to review, but it does eliminate unintentional bias.
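The prompting rules described above can be sketched in a few lines of Python. This is only an illustration of the logic as reported, not the vendor's implementation: the `PriorStudy` record, the `should_prompt` function, and the 730-day approximation of "two years" are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta

DAILY_QUOTA = 3                      # reviews prompted per weekday, per the article
LOOKBACK = timedelta(days=2 * 365)   # "past two years", approximated as 730 days


@dataclass
class PriorStudy:
    """Hypothetical record for a prior exam found when a new exam is opened."""
    subspecialty: str
    performed_on: date
    already_reviewed: bool


def should_prompt(current_subspecialty: str, prior: PriorStudy,
                  today: date, reviews_done_today: int) -> bool:
    """Return True if opening the current exam should trigger a review prompt."""
    if today.weekday() >= 5:                  # Saturday (5) or Sunday (6)
        return False
    if reviews_done_today >= DAILY_QUOTA:     # daily quota already filled
        return False
    if prior.already_reviewed:                # never re-review the same study
        return False
    if prior.subspecialty != current_subspecialty:
        return False
    return today - prior.performed_on <= LOOKBACK
```

Declining a prompt would simply leave `reviews_done_today` unchanged, so the next eligible exam triggers another prompt until the quota is met.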

If a peer review results in a score of 2 to 4, the reviewed radiologist is automatically sent a message with the information, but the identity of the reviewing radiologist is not disclosed. The system can also be configured to send alerts, such as for a score of 3 or 4, to the chairman or to the person responsible for quality assurance.
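The score-based routing can be pictured roughly as follows. The function name `recipients_for`, the recipient labels, and the `escalation_min` parameter are hypothetical; the thresholds follow the article's description, with the escalation level left configurable.

```python
def recipients_for(score: int, escalation_min: int = 3) -> list[str]:
    """Return who is notified for a given RadPeer score (1 to 4).

    The reviewer's identity is never part of the notification; only
    the roles that receive the message are listed here.
    """
    if score not in (1, 2, 3, 4):
        raise ValueError("RadPeer scores range from 1 to 4")
    recipients = []
    if score >= 2:                     # any discrepancy goes to the author
        recipients.append("reviewed_radiologist")
    if score >= escalation_min:        # configurable alert to chairman or QA
        recipients.append("qa_lead")
    return recipients
```

Under these assumptions, a score of 2 notifies only the original author, while a score of 3 or 4 also alerts whoever is responsible for quality assurance.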

The system interfaces with the RIS and PACS and is updated every evening.

The study and the surveys

It seemed logical that a fully integrated peer-review system would improve workflow and be better accepted, but Petscavage and colleagues decided to document the benefits of an automated system.

"Little information is available or has been published describing the differences in radiologists' perception and their degree of compliance with integrated systems," she said. "We wanted to learn what our colleagues thought of it, and what impact, if any, it would have."

The research team designed a 10-question online survey that was distributed a month before the automation. The survey used a five-point Likert scale (agree/disagree/neutral) and asked the radiologists whether the eRadPeer system was easy to use or disrupted daily workflow, whether an anonymous system would reduce concerns, and whether automated prompts might increase participation in peer review. Ten months after the automated system had been implemented, another survey was disseminated.

"The impact of the workflow efficiencies added by the automated peer-review system was very obvious," Petscavage said. "Whereas more than half of the respondents [53%] had felt the eRadPeer system was time-consuming and two-thirds [67%] felt it was disruptive, only 11% felt that way for both categories after it was automated."

Other benefits reported by more than two-thirds of the respondents were that peer review was easier to perform with the automated version, especially because it allowed a radiologist to fulfill a quota over time instead of in one sitting, and that it was more efficient with respect to workflow. Radiologists also liked the random nature of case selection and that their identity was kept anonymous.

However, the automated system was criticized by 78% for its inconsistency in filling in data fields with the name of the original reporting radiologist, a problem believed to be a computer glitch. Almost two-thirds did not like that the peer-review window was displayed behind the report dictation window. Subcategorizing the 2 through 4 ratings with two more specific ratings caused confusion for 22%, and 17% didn't like to be prompted to review cases outside of their own specialties.

One surprise: Lower compliance

One surprising finding was that after the automated peer-review system was implemented, the proportion of nonparticipating radiologists rose from 19% to 20%. In the fall of 2009, compliance was 91%, compared with 75% in the fall of 2010. In the spring of 2010, the rate was 95%, compared with only 75% in the spring of 2011.

What happened? In a discussion with AuntMinnie.com, Petscavage said that a number of factors the software could not affect might have caused the drop. The department stopped sending email reminders to radiologists who were behind on their peer-review quotas (currently 4.2% of the annual number of studies interpreted). It also stopped publicly naming individuals who did not participate or meet their quotas.

It turned out that radiologists who weren't participating with the original system didn't participate with an integrated one.

"Obtaining a high level of participation is a cultural issue," she noted. "Automation did not impact motivation."

Ultimately, automating eRadPeer proved to be very helpful to the peer-review committee of the radiology department. The committee was able to more efficiently open cases in which the reviewing radiologist's interpretation differed from the report and to assess whether the score was appropriate. The system also provided a useful way to review the reports of fellows and residents.

Finally, the automated system can help identify nonrandom report review selection, or the consistent declining of invitations to review until the name of a specific colleague appears.

"We're pleased that we automated peer review," Petscavage concluded. "We recommend that other departments consider it."