What's the average radiation dose of a CT scan performed in the U.S.? How do your exam protocols compare with the norm? If you don't know the answers to these questions, you're not alone. But don't worry: Help is coming in the form of the American College of Radiology's (ACR) Dose Index Registry.

The ACR established the Dose Index Registry in May 2011 with the goal of enabling imaging facilities to compare their CT doses to regional and national values. Last month, attendees at the Society for Imaging Informatics in Medicine (SIIM) annual meeting heard an update of what's been accomplished in the registry's first year of operation.

What's the verdict? Although early adopters of the registry have reported some challenges, they have also recognized its value as imaging facilities struggle to get a handle on the radiation dose problem.

Setting a benchmark

SIIM attendees were given a candid, firsthand account of participation in the program by researchers from the University of Washington Medical Center and Harborview Medical Center in Seattle. Their experiences highlight the importance of tracking dose as radiology comes under the spotlight with respect to both patient safety and imaging utilization.

For example, an imaging facility can implement dose reduction strategies internally, but how does it know how successfully it's reducing dose compared to similar facilities or on a regional, state, or national level?

Dose registries can provide such benchmarks. The ACR Dose Index Registry enables individual practices to monitor dose indices and compare practice patterns. A component of the National Radiology Data Registry, the dose registry collects and compares dose information using standard methods of data collection, such as DICOM structured reporting and the Integrating the Healthcare Enterprise (IHE) Radiation Exposure Monitoring (REM) profiles.

The registry's long-term goal is to establish national benchmarks that will enhance facilities' ability to track radiation dose reduction efforts over time. It is hoped that such benchmarks can be used to develop national standards.

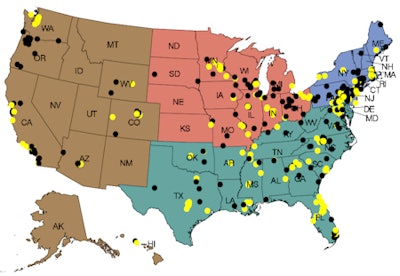

In the 12 months since the registry's launch, more than 450 facilities have registered, and 200 are active participants. Registrants represent a composite of U.S. imaging facilities, including academic hospitals, children's hospitals, urban and community hospitals, and freestanding imaging centers.

"Data from almost 2 million scans representing about 1 million exams have been logged in the registry; in recent months, the growth has been dramatic," said Richard Morin, PhD, an ACR fellow from the Mayo Clinic in Jacksonville, FL. A low and graduated fee structure has been implemented to encourage participation.

Dose Index Registry facilities as of June 4, 2012. Yellow dots indicate facilities registered and contributing data. Black dots indicate facilities registered but not yet contributing. Image courtesy of the ACR.

The data acquisition process is automatic. Dose data from a CT scanner are transmitted to ACR software using a DICOM structured report or third-party software that utilizes optical character recognition to pull the dose from a screen capture. After being anonymized, the data are transmitted based on time parameters set by the facility and uploaded to the ACR database.

Users configure their own criteria for comparison, and these criteria can be changed at any time. Reports are published on a semiannual basis, although the ACR plans to give facilities electronic access in the future.

Users can select reports based on individual CT scanners, all scanners being utilized, or the type of exam. One type of report shows every exam reported, which Morin said is a fast and easy way to identify outliers and to determine how they occurred.

"Facilities may identify a small number of patients who received what appeared to be 10 times the average dose index for a noncontrast head CT," he explained. "What probably happened was that this patient had a perfusion contrast study added, but if a technologist does not close the first scan, the two are combined."
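The outlier check Morin describes can be sketched in a few lines. This is only an illustration, not the registry's own reporting logic: the record fields (`exam_id`, `exam_type`, `dose_index`), the sample values, and the default cutoff of five times the typical value are all assumptions made for the example. It also compares each exam with the median for its exam type rather than the average, because a single combined scan would inflate an average.

```python
from statistics import median

def flag_outliers(exams, factor=5.0):
    """Return exams whose dose index is at least `factor` times the
    median dose index of all exams with the same exam type."""
    doses_by_type = {}
    for exam in exams:
        doses_by_type.setdefault(exam["exam_type"], []).append(exam["dose_index"])
    # Typical value per exam type (median, so one extreme exam does not skew it).
    typical = {t: median(doses) for t, doses in doses_by_type.items()}
    return [e for e in exams if e["dose_index"] >= factor * typical[e["exam_type"]]]

# Made-up records for illustration only; not registry data.
sample_exams = [
    {"exam_id": "A1", "exam_type": "CT HEAD WITHOUT CONTRAST", "dose_index": 55.0},
    {"exam_id": "A2", "exam_type": "CT HEAD WITHOUT CONTRAST", "dose_index": 60.0},
    {"exam_id": "A3", "exam_type": "CT HEAD WITHOUT CONTRAST", "dose_index": 58.0},
    {"exam_id": "A4", "exam_type": "CT HEAD WITHOUT CONTRAST", "dose_index": 62.0},
    {"exam_id": "A5", "exam_type": "CT HEAD WITHOUT CONTRAST", "dose_index": 600.0},
]

flagged = flag_outliers(sample_exams)
print([e["exam_id"] for e in flagged])  # prints ['A5']
```

A flagged exam is a prompt for review, not proof of overexposure: as in Morin's example, it may simply be two scans recorded as one.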

Creating standardized names for exam categories proved to be the biggest challenge. "Did you know that there are at least 1,400 different names for a head CT?" he asked. "We didn't, until facilities began to register. Using the registry makes a very strong case for the need to use standardized terminology."

The ACR scrambled to develop software that would allow its users to categorize exam names into more generic terms for improved comparison. More refinement is still needed.
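To make the categorization task concrete, here is a minimal sketch of mapping free-text exam names to generic categories. It is not the ACR's mapping software; the lookup table, the category label, and the sample exam names are hypothetical. Names are normalized for case and spacing before lookup, and anything that does not match is set aside for a person to review.

```python
import re

# Hypothetical lookup from normalized local exam names to a generic category.
CATEGORY_BY_NAME = {
    "ct head wo contrast": "CT HEAD WITHOUT CONTRAST",
    "head ct w/o": "CT HEAD WITHOUT CONTRAST",
}

def normalize(name):
    """Lowercase the name, trim it, and collapse runs of whitespace."""
    return re.sub(r"\s+", " ", name.strip().lower())

def map_exam_names(names, lookup):
    """Split names into (mapped, unmapped): a dict of original name to
    category, and a list of names that still need manual review."""
    mapped, unmapped = {}, []
    for name in names:
        key = normalize(name)
        if key in lookup:
            mapped[name] = lookup[key]
        else:
            unmapped.append(name)
    return mapped, unmapped

# Made-up local exam names for illustration only.
local_names = ["CT Head  WO Contrast", "HEAD CT w/o", "Brain routine"]
mapped, unmapped = map_exam_names(local_names, CATEGORY_BY_NAME)
print(mapped)
print(unmapped)
```

An exact-match table like this only goes so far. With hundreds of variants per exam, most of the effort lies in reviewing the unmapped list.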

Another issue the ACR is working on is the need to differentiate exams that use iterative reconstruction software, which can significantly reduce radiation dose.

"The registry is still a work in progress," Morin said. "But in addition to being a powerful behavior modification tool for radiologists, it can also be a great quality and safety marketing tool for patients."

One participant's experience

The radiologists at University of Washington Medical Center and Harborview Medical Center, its affiliated level I trauma center, were among the first to join the registry. Dr. Jeffrey Robinson, acting assistant professor of radiology, said that the radiology departments of both institutions had put a lot of effort into dose monitoring for several years. The idea of being able to inexpensively automate the process and obtain benchmark data was very appealing.

But the process had its challenges, Robinson explained, some of which were associated with being early adopters of the registry.

The hospitals signed up in May and planned to install the software in June 2011. This timing was the first hurdle they faced. The radiology departments were in the process of consolidating their RIS software, and radiology IT staff resources were stretched to the limit, even though the installation ultimately needed only a few hours of IT technical time.

The next challenge was coordinating the schedules of modality vendor technical support staff, the in-house radiology IT staff, and the ACR technical staff. ACR handles the remote software installation, Robinson explained, but it is necessary to do some configuration at the reporting sites.

"This is the nature of a busy world," he said. "Just plan some flexibility into your implementation schedule."

To transfer data, either a CT scanner or PACS hardware needs to be configured, and the server holding the ACR registry information needs to be integrated into the department's IT network. Two configurations are possible: one has each CT scanner transfer data directly to the ACR server, while the other has the scanners transmit data to the PACS, which then transmits the data to the ACR server.

"We chose the latter configuration because there are fewer connections," he said. "We also wanted to make life simpler for ourselves by not having to reconfigure the server every time we added or swapped out CT scanners."

The hospitals had a mix of new and older scanners, and the older ones did not support DICOM structured reporting. ACR's Triad optical character recognition software reads the information template of a CT scanner for dose information, so this was not a problem. However, what was surprising was that the hospitals' PACS did not know what to do with the DICOM structured report information sent from a new scanner.

"The PACS interpreted this information as a note that it placed in a comment field," Robinson said. "It didn't forward this information to the ACR Triad server. The solution was to use optical character recognition with the new CT scanners as well as the older ones."

Once implementation began, the Triad server crashed almost every day. It took some time to figure out what was happening: The hard drive was filling up too fast because the system had been programmed to send data from the entire CT scan. The problem was resolved when ACR advised that only the dose report needed to be sent, not the entire study. Once this adjustment was made, the server stopped crashing.

Robinson attributed the problem to being an early adopter. He presumed that ACR has since modified the installation instructions for its server.

While the organizations didn't have 1,400 terms for a head CT scan, they did have 19 different descriptions for a chest CT with contrast. Most exams had multiple names, which were acquired over time because the exam name is a free text field in the scanner. Even if specific exam names stopped being used, they couldn't be abolished. At Robinson's institutions, the PACS needed them to retrieve prior exams.

The biggest headache for early adopters may have been ACR's request in mid-December 2011 to map all names of the exams that had been submitted for the past five months to simpler categories. While Robinson agreed with Morin that this was needed, it wasn't easy. The data mapping tool provided was inefficient, and mapping challenges included difficulty in assigning body regions to specific series and overreporting dose for multipart exams.

"The timing of this request couldn't have been worse, right after the RSNA [meeting] and on the eve of and during the holidays," Robinson said. "This was time-consuming and tedious. We buckled down, with my SIIM co-presenters, medical physicist Kalpana Kanal, PhD, and Dr. Tracy Robinson, as well as our lead CT techs doing a lot of the work."

The first report from the ACR arrived in February 2012: 94 pages of detailed data covering July through December 2011. It was apparent that other participants had experienced all of the mapping issues Robinson and his colleagues had encountered.

"We need to figure out what to do with all this information," he said. "We're looking forward to getting the second report and comparing the two. But I'm very excited."

"The ACR Dose Index Registry is definitely heading in the right direction and will [become more] valuable to its subscribers as more radiology departments register," he added. "In spite of the challenges we had as early adopters, we're really glad that we joined."
