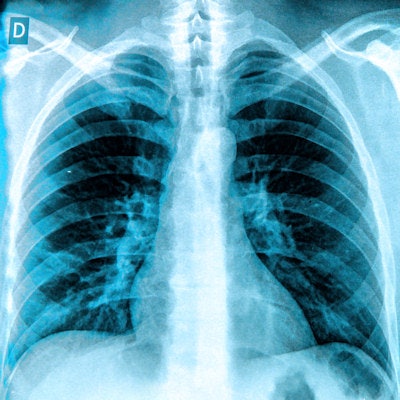

An artificial intelligence (AI) algorithm developed by Stanford University researchers can detect 14 types of medical conditions on chest x-ray images, and it's even able to diagnose pneumonia better than expert radiologists, according to a paper published November 14 on arXiv.org.

Their model, called CheXNet, is one of the first to benefit from a public dataset of chest x-ray images released by the U.S. National Institutes of Health (NIH) Clinical Center in September to stimulate development of deep-learning algorithms. That dataset of more than 100,000 frontal-view chest radiographs -- labeled with up to 14 possible pathologies -- was released along with an algorithm that could diagnose many of those pathologies. The NIH hoped the initial algorithm would inspire other researchers to advance the work.

Thanks to the NIH's ChestX-ray14 dataset, the Stanford team made rapid progress in developing CheXNet. After just a week of training, the deep-learning model was able to diagnose 10 of the 14 pathologies more accurately than the initial NIH algorithm, and within a month, CheXNet was better for all 14 pathologies.

What's more, it could also diagnose pneumonia more accurately than four Stanford radiologists, according to the team led by Pranav Rajpurkar and Jeremy Irvin of Stanford's Machine Learning Group.

Diagnostic challenges

A chest x-ray is currently the best available method for detecting pneumonia. Spotting pneumonia on the image can be difficult, however: its appearance is often vague, it can overlap with other diagnoses, and it can mimic many benign conditions, according to the Stanford group.

The researchers used the ChestX-ray14 dataset to train CheXNet, a 121-layer dense convolutional neural network (DenseNet). Of the 112,120 x-rays, 80% were used for training and 20% were reserved for validating the model. The images were preprocessed before being fed into the network, and the training data were augmented with random horizontal flipping.
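The split and augmentation described above can be sketched in a few lines of Python. This is a minimal illustration, not the Stanford team's code: the helper names `split_indices` and `random_horizontal_flip` are hypothetical, images are represented as plain lists of pixel rows, and the split is assumed to be random at the image level because the article does not say how images were assigned to each set.

```python
import random

def split_indices(n_images, train_fraction=0.8, seed=0):
    """Randomly assign image indices to a training set and a validation set.

    Assumption (not from the article): images are shuffled and split
    individually, with no grouping by patient.
    """
    indices = list(range(n_images))
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(indices)
    n_train = int(round(n_images * train_fraction))
    return indices[:n_train], indices[n_train:]

def random_horizontal_flip(image, rng, p=0.5):
    """Mirror an image left to right with probability p.

    The image is a list of pixel rows, so flipping reverses each row.
    """
    if rng.random() < p:
        return [row[::-1] for row in image]
    return image

train_idx, val_idx = split_indices(112_120)
print(len(train_idx), len(val_idx))  # 89696 22424

rng = random.Random(42)
print(random_horizontal_flip([[1, 2, 3]], rng, p=1.0))  # [[3, 2, 1]]
```

Because ChestX-ray14 contains multiple images from many patients, splitting by patient rather than by image keeps one patient's x-rays from appearing in both sets; the article does not specify which approach was used.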

In addition to estimating the probability of pneumonia, the model produces a "heat map" overlaid on the x-ray that shows the radiologist which areas of the image are most indicative of pneumonia and other pathologies.
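The article does not explain how the heat map is computed. One standard technique for networks that end in global average pooling, as DenseNets do, is class activation mapping (CAM): the heat map for a class is the sum of the final convolutional feature maps, each weighted by that class's classifier weight. The sketch below assumes this approach; the function names, feature maps, and weights are hypothetical toy values, not CheXNet's.

```python
import math

def sigmoid(z):
    """Map a raw network score (logit) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def class_activation_map(feature_maps, class_weights):
    """Weighted sum of the final convolutional feature maps for one class.

    feature_maps: K maps, each an H x W list of lists.
    class_weights: K weights from the final linear layer for that class.
    Large values mark regions that pushed the class score up.
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    return cam

def class_probability(feature_maps, class_weights, bias=0.0):
    """Global-average-pool each map, apply the linear layer, then a sigmoid."""
    pooled = [sum(map(sum, fmap)) / (len(fmap) * len(fmap[0])) for fmap in feature_maps]
    logit = sum(w * p for w, p in zip(class_weights, pooled)) + bias
    return sigmoid(logit)

feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],  # toy map 1: active in the top-left corner
    [[0.0, 0.0], [0.0, 1.0]],  # toy map 2: active in the bottom-right corner
]
weights = [2.0, -1.0]  # hypothetical classifier weights for "pneumonia"
print(class_activation_map(feature_maps, weights))  # [[2.0, 0.0], [0.0, -1.0]]
print(round(class_probability(feature_maps, weights), 3))  # 0.562
```

Because pooling and the linear layer are both linear, the mean of the heat map equals the logit before the sigmoid (minus the bias), which is why the map can be read as a spatial breakdown of the model's score.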

To compare the algorithm with radiologists' interpretations, the researchers had four practicing academic radiologists independently annotate a subset of 420 images from the NIH dataset for signs of pneumonia. For the study, the majority vote of the radiologists served as the ground truth. Receiver operating characteristic (ROC) analysis showed that CheXNet achieved an area under the ROC curve (AUC) of 0.788.
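The majority-vote ground truth and the AUC can be illustrated with a short sketch. The names are hypothetical, the tie rule (a 2-2 split counts as negative) is an assumption because the article does not say how ties among four readers were resolved, and the AUC is computed with the rank-based (Mann-Whitney) formulation, which gives the same value as the trapezoidal area under the empirical ROC curve. All labels and scores are toy values.

```python
def majority_label(reads, min_votes=3):
    """Ground-truth label from several radiologists' binary reads.

    Assumption: an image is positive only if at least `min_votes` readers
    (3 of 4 here) marked pneumonia, so a 2-2 tie counts as negative.
    """
    return int(sum(reads) >= min_votes)

def roc_auc(labels, scores):
    """Area under the ROC curve via the rank (Mann-Whitney) formulation.

    Equals the probability that a randomly chosen positive image gets a
    higher score than a randomly chosen negative one; ties count as half.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Toy reads from four radiologists for five images (1 = pneumonia).
reads = [[1, 1, 1, 0], [0, 0, 1, 0], [1, 1, 1, 1], [1, 1, 0, 0], [0, 0, 0, 0]]
labels = [majority_label(r) for r in reads]
scores = [0.9, 0.8, 0.7, 0.5, 0.1]  # hypothetical model probabilities
print(labels)  # [1, 0, 1, 0, 0]
print(round(roc_auc(labels, scores), 3))  # 0.833
```

The double loop is quadratic in the number of images, which is fine for a 420-image test set; a sort-based version scales better to larger sets.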

"The sensitivity-specificity point for each radiologist and for the average lie below the ROC curve, signifying that CheXNet is able to detect pneumonia at a level matching or exceeding radiologists," the authors wrote.
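The comparison in the quote places each radiologist's single operating point, a (sensitivity, specificity) pair, against the model's ROC curve. A minimal sketch, assuming each reader's binary reads are scored against the majority-vote labels: the check asks whether any model threshold is at least as sensitive and at least as specific as the reader, which means the reader's point lies on or below the model's empirical (step-wise) ROC curve. The function names and data are hypothetical.

```python
def sensitivity_specificity(labels, predictions):
    """Sensitivity (true-positive rate) and specificity (true-negative rate)."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)

def roc_points(labels, scores):
    """(sensitivity, specificity) of the model at every distinct threshold."""
    points = []
    # The extra infinite threshold adds the "call nothing positive" point.
    for threshold in sorted(set(scores)) + [float("inf")]:
        preds = [int(s >= threshold) for s in scores]
        points.append(sensitivity_specificity(labels, preds))
    return points

def point_below_curve(labels, scores, reader_point):
    """True if some model threshold is at least as sensitive AND as specific.

    Conservative: only the model's actual operating points are checked,
    with no interpolation between them.
    """
    sens_r, spec_r = reader_point
    return any(sens >= sens_r and spec >= spec_r
               for sens, spec in roc_points(labels, scores))

labels = [1, 0, 1, 0, 0]             # toy majority-vote ground truth
scores = [0.9, 0.8, 0.7, 0.5, 0.1]   # toy model probabilities
reader = sensitivity_specificity(labels, [1, 1, 0, 0, 0])  # toy reader's calls
print(point_below_curve(labels, scores, reader))  # True
```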

ROC analysis also showed that the algorithm outperformed all previously published results on the NIH chest x-ray dataset for each of the 14 pathologies, according to the group.

CheXNet has the potential to reduce the number of missed pneumonia cases and significantly speed up workflow by showing radiologists where to look first, according to the researchers. They also hope it will be useful in parts of the world where access to radiologists is limited.

In addition to the CheXNet work, Stanford's Machine Learning Group has been developing AI algorithms for diagnosing irregular heartbeat and mining electronic medical record data.