A new AI PET/CT tool can assess patient cancer risk, predict tumor response, and estimate survival, according to research findings delivered June 10 at the Society of Nuclear Medicine and Molecular Imaging (SNMMI) annual meeting in Toronto.

In his presentation, Kevin Leung, PhD, from Johns Hopkins University in Baltimore, MD, shared his team’s results, which demonstrated the AI approach’s ability to accurately detect six different cancer types on whole-body PET/CT scans.

“In addition to performing cancer prognosis, the approach provides a framework that will help improve patient outcomes and survival by identifying robust predictive biomarkers, characterizing tumor subtypes, and enabling the early detection and treatment of cancer,” he said in an SNMMI statement. “The approach may also assist in the early management of patients with advanced, end-stage disease by identifying appropriate treatment regimens and predicting response to therapies, such as radiopharmaceutical therapy.”

Automatic cancer detection and characterization can pave the way for earlier treatment and better patient outcomes. However, Leung said that most AI models built to detect cancer are developed on small to moderately sized datasets that “usually” cover a single malignancy or radiotracer.

“This represents a critical bottleneck in the current training and evaluation paradigm for AI applications in medical imaging and radiology,” Leung said.

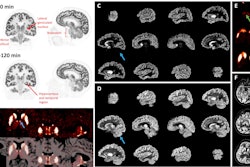

Leung and colleagues developed the AI tool using a deep transfer learning approach for fully automated, whole-body tumor segmentation and prognosis on PET/CT scans. The technique extracts radiomic features and whole-body imaging measures from the predicted tumor segmentations, allowing for quantification of molecular tumor burden and uptake across all cancer types.
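To make "whole-body imaging measures" more concrete, the following is a minimal Python sketch of how tumor-burden summaries can be derived once a segmentation exists. It assumes a PET volume already converted to standardized uptake values (SUV) and a binary tumor mask of the same shape. The helper name `whole_body_measures` and the specific measures (metabolic tumor volume, SUVmax, SUVmean, and total lesion uptake) are illustrative assumptions. They are not the feature set the Johns Hopkins team reported.

```python
import numpy as np


def whole_body_measures(suv: np.ndarray, mask: np.ndarray, voxel_volume_ml: float) -> dict:
    """Summarize tumor burden from a PET SUV volume and a binary tumor mask.

    Hypothetical helper: the chosen measures (MTV, SUVmax, SUVmean, total
    lesion uptake) are common examples, not the study's published features.
    """
    if suv.shape != mask.shape:
        raise ValueError("SUV volume and mask must have the same shape")
    tumor = mask.astype(bool)
    n_voxels = int(tumor.sum())
    if n_voxels == 0:
        # No predicted tumor: report zero burden instead of NaN statistics
        return {"mtv_ml": 0.0, "suv_max": 0.0, "suv_mean": 0.0, "tlu": 0.0}
    tumor_suv = suv[tumor]
    mtv_ml = n_voxels * voxel_volume_ml  # metabolic tumor volume in mL
    suv_mean = float(tumor_suv.mean())
    return {
        "mtv_ml": mtv_ml,
        "suv_max": float(tumor_suv.max()),
        "suv_mean": suv_mean,
        "tlu": suv_mean * mtv_ml,  # total lesion uptake = SUVmean x MTV
    }


# Toy example: a 2x2x2 "lesion" with SUV 5.0 inside a 4x4x4 background of zeros
suv = np.zeros((4, 4, 4))
suv[1:3, 1:3, 1:3] = 5.0
mask = suv > 2.5  # stand-in for an AI-predicted segmentation
measures = whole_body_measures(suv, mask, voxel_volume_ml=0.5)
print(measures)  # mtv_ml 4.0, suv_max 5.0, suv_mean 5.0, tlu 20.0
```

Because every measure is computed from the mask alone, the same code applies regardless of tracer (FDG or PSMA) or cancer type. That tracer-agnostic property is what allows a single pipeline to quantify burden across malignancies.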

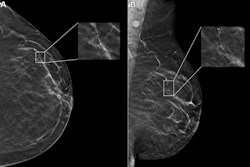

Illustrative examples of the predicted tumor segmentations by the deep transfer learning approach across six cancer types. Pretherapy and post-therapy scans are shown for breast cancer. Image and caption courtesy of SNMMI.

From there, the team used these quantitative features and imaging measures to build predictive models and evaluated their prognostic value for risk stratification, survival estimation, and prediction of treatment response in patients with cancer.

The study included data from 611 FDG PET/CT scans of patients with lung cancer, melanoma, lymphoma, head and neck cancer, and breast cancer, as well as 408 PSMA PET/CT scans of prostate cancer patients.

The researchers reported that the approach segmented tumors with high accuracy across the included cancer types and achieved a median true negative rate and negative predictive value of 1.00 across all patients.

Performance of the AI approach on voxel-wise tumor segmentation

| Measure | Lung cancer | Melanoma | Lymphoma | Prostate cancer |
|---|---|---|---|---|
| True positive rate | 0.75 | 0.85 | 0.87 | 0.75 |
| Positive predictive value | 0.92 | 0.76 | 0.87 | 0.76 |
| Dice similarity coefficient | 0.81 | 0.76 | 0.83 | 0.73 |
| False discovery rate | 0.08 | 0.24 | 0.13 | 0.24 |
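The measures in the table, along with the true negative rate and negative predictive value mentioned above, follow standard voxel-wise definitions based on counts of true and false positives and negatives. The short Python sketch below is a hypothetical helper written for illustration. It is not code from the study, but it shows how the measures relate to one another. For example, false discovery rate equals 1 minus positive predictive value, which matches every column of the table (0.92 and 0.08 for lung cancer).

```python
import numpy as np


def _safe_ratio(num: int, den: int) -> float:
    """Return num/den, or NaN when the denominator is zero."""
    return num / den if den else float("nan")


def voxelwise_metrics(pred: np.ndarray, truth: np.ndarray) -> dict:
    """Voxel-wise segmentation metrics from binary predicted and reference masks.

    Hypothetical helper using textbook definitions; not the study's code.
    """
    p = pred.astype(bool)
    t = truth.astype(bool)
    tp = int(np.sum(p & t))    # tumor voxels correctly labeled tumor
    fp = int(np.sum(p & ~t))   # background voxels wrongly labeled tumor
    fn = int(np.sum(~p & t))   # tumor voxels the model missed
    tn = int(np.sum(~p & ~t))  # background voxels correctly left unlabeled
    return {
        "tpr": _safe_ratio(tp, tp + fn),            # true positive rate (sensitivity)
        "ppv": _safe_ratio(tp, tp + fp),            # positive predictive value (precision)
        "dice": _safe_ratio(2 * tp, 2 * tp + fp + fn),  # overlap between masks
        "fdr": _safe_ratio(fp, tp + fp),            # false discovery rate = 1 - PPV
        "tnr": _safe_ratio(tn, tn + fp),            # true negative rate (specificity)
        "npv": _safe_ratio(tn, tn + fn),            # negative predictive value
    }


# Toy 10-voxel example: 4 tumor voxels in the reference, 3 of them found, 1 false alarm
truth = np.array([1, 1, 1, 1, 0, 0, 0, 0, 0, 0])
pred = np.array([1, 1, 1, 0, 1, 0, 0, 0, 0, 0])
metrics = voxelwise_metrics(pred, truth)
print(metrics)  # tpr 0.75, ppv 0.75, dice 0.75, fdr 0.25, tnr and npv 5/6
```

The toy example also suggests why true negative rate and negative predictive value can sit near 1.00 on whole-body scans. Background voxels vastly outnumber tumor voxels, so even a handful of errors barely moves those ratios.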

The team also reported the following:

- The prognostic risk model for prostate cancer yielded an overall accuracy of 0.83 and an area under the receiver operating characteristic curve (AUROC) of 0.86.

- Patients classified as low-, intermediate-, and high-risk had mean follow-up prostate-specific antigen (PSA) levels of 9.18 ng/mL, 26.92 ng/mL, and 727.46 ng/mL, and average PSA doubling times of 8.67, 8.18, and 4.81 months, respectively.

- Patients predicted as high-risk had a shorter median overall survival compared with low- or intermediate-risk patients (1.64 years vs. median not reached, p < 0.001).

- Patients predicted as intermediate-risk had a shorter median overall survival compared with low-risk patients (p < 0.05).

- The risk score for head and neck cancer was significantly associated with overall survival in both univariable and multivariable analyses (p < 0.05).

- Predictive models for breast cancer predicted complete pathologic response with overall accuracies of 0.72 and 0.84 and AUROC values of 0.72 and 0.76 when using only pretherapy imaging measures and both pretherapy and post-therapy measures, respectively.

Leung said that generalizable, fully automated AI tools will play a key role in imaging centers in the future by assisting physicians in interpreting PET/CT scans of cancer patients.

He added that the deep transfer learning approach used in the study could yield insights into the biological processes underlying tumors, processes that may currently be understudied in large patient populations.