BARCELONA - How computer-aided detection (CAD) software works in theory is one thing, and how it functions in practice is another, as radiologists who use CAD have come to appreciate. But the ways in which readers react to CAD prompts, a subject that has received scant attention, have important implications for both CAD design and reader training.

A case in point is a study, presented Thursday by U.K. researchers at the Computer Assisted Radiology and Surgery (CARS) meeting, that set out to assess the dependability of CAD. The so-called HTA (Health Technology Assessment) study, conducted by University College London and St. George's Hospital, London, evaluated the R2 ImageChecker 1000 (Hologic, Bedford, MA) to assess how accurately the software detected breast cancer lesions.

The HTA study included 50 readers (radiologists, radiographers, and breast clinicians) who evaluated 180 cases (including 60 breast cancers) with and without CAD. A standard analysis showed that CAD had no net impact on lesion detection. The readers were trained on the CAD software and were told that the study was about CAD, but they were not given detailed information about the implications of their choices, said lead investigator Dr. Eugenio Alberdi from City University London and St. George's.

In an earlier study based on a subsequent analysis of the HTA data, the researchers used exploratory regression analysis and found a combination of positive and negative effects that varied as a function of reader sensitivity and case difficulty. That study also looked at what happened when readers were faced with cases in which CAD failed to place the right prompt on the cancer.

"We found that the computer was biasing people's decisions," Alberdi said. In particular, that study found that an absence of prompts decreased readers' likelihood of recalling a case, whether true positive or true negative.

"We saw there were some disparate behaviors and we wanted to look in more detail at how the prompts were affecting people's decisions," he said.

This was no easy task, because the HTA analysis looked only at which cases were referred and what the CAD marks showed, Alberdi explained. Some referrals, for example, may have been prompted by a suspicious lesion in another region of the breast, unrelated to the CAD mark or its absence.

The difficulty reflects a basic problem of CAD analysis: CAD functions at the lesion level, while cases are referred at the patient level. Moreover, CAD's job is to notice features, not to evaluate them or advise on whether a case should be referred, he said.

"At a feature level, maybe we can get an idea of how CAD can help more than it does now," he said.

CAD is considered to have provided correct output for a case when it prompts at least one of the areas of the mammogram where there is a likely cancer, even if it also prompts areas where there is no cancer or fails to prompt other areas where there is cancer.
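The case-level rule above can be written as a one-line set check. This is a minimal sketch, assuming each case is described by two hypothetical sets of region labels, one for areas CAD prompted and one for areas containing cancer; the labels and function name are illustrative and not taken from the study.

```python
def cad_output_correct(prompted_regions: set, cancer_regions: set) -> bool:
    """Case-level rule (hypothetical helper): CAD counts as correct if it
    prompts at least one cancer region. Extra false prompts, or cancer
    regions it fails to prompt, do not change the verdict."""
    return not prompted_regions.isdisjoint(cancer_regions)


# Illustrative case: one cancer area prompted, one missed, plus a false prompt.
print(cad_output_correct({"R-upper-outer", "L-lower-inner"},
                         {"R-upper-outer", "R-retroareolar"}))  # True
# Illustrative case: CAD prompts only a noncancer area.
print(cad_output_correct({"L-lower-inner"}, {"R-upper-outer"}))  # False
```

The rule ignores lesion-level mistakes by design, which is one reason case-level scoring can hide how individual prompts affect readers.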

Mining CAD data

To get a better idea of how CAD output was being used, the researchers went back to the readers' original notes from the HTA study, looking for information on how the referral decisions had been made. Compiling the notes took one member of the research team six months, Alberdi said. For every case, the notes recorded each feature a reader had marked, whether that feature had been prompted by CAD, whether it corresponded to a cancer, and the degree of suspicion the reader assigned to it (on a 1-5 scale, with 1 meaning "not significant").

"We realized that these classifications were not completely satisfactory, but for practical reasons that's how we analyzed things," he said.

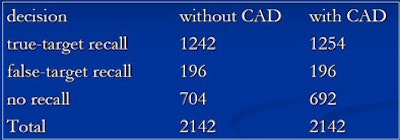

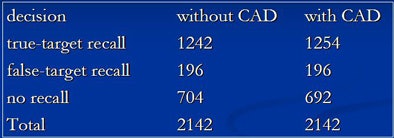

In all, Alberdi and colleagues extracted 5,839 reports for 59 of the 60 original cancer cases into an electronic database and performed a detailed analysis of these newly processed data. The analysis looked at true-target versus false-target recalls: did readers always recall a cancer case because the cancer itself looked suspicious, or because CAD prompted them to re-evaluate a lesion they had dismissed? And what effects did CAD have on readers' reactions to individual lesions? Were readers more likely to classify a lesion as suspicious if CAD had prompted it?

"The purpose of CAD is not to say 'this feature may be a cancer,' but instead 'look at this feature,' " Alberdi said.

A true-target recall was defined as an instance in which the reader both recalled the case and marked the cancer lesion. A false-target recall was one in which the reader recalled the case for a lesion other than the cancer lesion. In all, 13.5% of recalls were false-target recalls, he said.
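The recall definitions above amount to a simple classification of each reader report on a cancer case. The sketch below assumes a hypothetical per-report record with two yes/no fields, and it treats "recalled without marking the cancer" as a false-target recall, a simplification of "recalled for a lesion other than the cancer." The toy data are invented for illustration and are not the study's.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Report:
    """One reader's report on one cancer case (hypothetical fields)."""
    recalled: bool       # did the reader recall the case?
    marked_cancer: bool  # did the reader mark the actual cancer lesion?


def classify_recall(report: Report) -> Optional[str]:
    """Return 'true-target', 'false-target', or None if not recalled."""
    if not report.recalled:
        return None
    # Assumption: a recall without marking the cancer was made for another lesion.
    return "true-target" if report.marked_cancer else "false-target"


def false_target_fraction(reports: list) -> float:
    """Share of recalls that were false-target recalls."""
    labels = [classify_recall(r) for r in reports if r.recalled]
    if not labels:
        return 0.0
    return labels.count("false-target") / len(labels)


# Toy data only: 6 true-target recalls, 1 false-target recall, 3 non-recalls.
toy = ([Report(True, True) for _ in range(6)]
       + [Report(True, False)]
       + [Report(False, False) for _ in range(3)])
print(f"{false_target_fraction(toy):.1%}")  # 14.3%
```

Non-recalled reports are excluded from the denominator, matching the article's framing of the 13.5% figure as a share of recalls rather than of all reports.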

A novel finding of the study was that 13.5% of recalls were false-target recalls, meaning the reader recalled the case for a lesion other than the cancer lesion. All data and charts courtesy of Dr. Eugenio Alberdi.

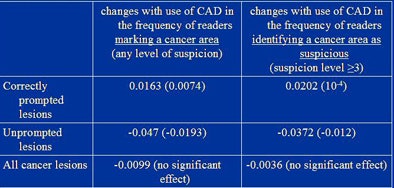

According to these criteria, CAD had both positive and negative effects on the readers, just as the earlier HTA study had suggested, Alberdi said. The new analysis found that the presence of a CAD mark increased the likelihood that the reader would mark a lesion, an intended effect of CAD, he said.

At the same time, the presence of a CAD mark also increased the likelihood that the reader would consider a lesion to be suspicious, an unintended effect. Finally, the lack of CAD prompts in an area made it more likely to be marked by a reader, he reported.

The chart above shows the difference in readers' reactions between CAD and non-CAD conditions in the same area of the breast for the identification of cancerous lesions. (Figures in parentheses indicate a 95% one-sided confidence bound on the change in probability.)

|

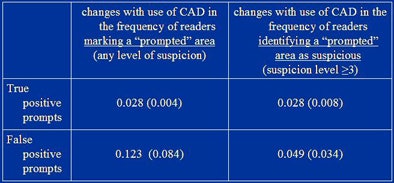

| Readers' reactions to areas prompted by CAD show that CAD-prompted markings are more common for false-positive rather than true-positive prompts. (Figures in parentheses indicate a 95% one-sided confidence bound on change in probability.) |

"When CAD marks are placed in noncancer areas, people are more likely to recall [patients] when CAD is being used than when it's not being used," he said. "And the presence of prompts in an area makes people more suspicious of that area."

The results also suggested that the higher the readers' ability to distinguish between cancers and noncancers without CAD, the less likely they were to alter their responses based on incorrect CAD marks. In response, a member of the audience suggested that it might be desirable to have adaptable CAD settings based on reader skill levels.

A radiologist in the audience who used breast CAD regularly questioned the notion that CAD should not serve to heighten a reader's suspicion that a marked lesion is malignant. "That is exactly what CAD is for," he said.

By Eric Barnes

AuntMinnie.com staff writer

June 26, 2008


Copyright © 2008 AuntMinnie.com