A large language model called Axpert shows potential for automatically labeling infant abdominal x-ray (AXR) reports for necrotizing enterocolitis, according to a group at the University of Michigan.

The model can be deployed locally to preserve data privacy and could serve as a framework for other imaging modalities and diseases in children, noted lead author Yufeng Zhang, PhD, and colleagues.

“This tool not only promises to reduce the workload of medical professionals but also improves the accuracy of diagnosing severe conditions in young patients, showcasing the potential of AI in enhancing pediatric healthcare,” the group wrote. The study was published February 10 in JAMIA Open.

Necrotizing enterocolitis (NEC) is a devastating disease in neonates, with mortality rates of up to 30%. It can cause perforation of the bowel, and the resulting infection can lead to sepsis. Given these severe outcomes, there is a pressing need for sensitive techniques that can accurately identify NEC, according to the authors.

Although several well-developed report-labeling methods exist for chest x-rays, similar tools for AXRs are notably absent, they noted. Moreover, annotating AXRs for NEC requires substantial domain expertise and is time-consuming and costly.

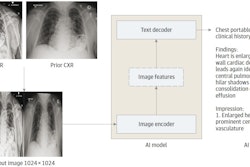

To address this gap, the researchers developed Axpert, a privacy-preserving large language model that automatically extracts labels from AXR reports by identifying critical features of NEC such as pneumatosis, portal venous gas, and free air.

The study included AXR reports acquired in the neonatal intensive care unit at C.S. Mott Children’s Hospital in Ann Arbor between January 2016 and March 2024. To create a dataset for algorithm training and testing, two clinicians manually labeled 2,498 reports as positive, negative, or uncertain for NEC. Of these, 2,061 reports (or subsets of them) were used for model training (64%) and development (18%), while the remaining 437 (18%) were held out to evaluate the model.

The researchers trained Axpert to detect NEC by classifying its subtypes (pneumatosis, portal venous gas, or free air) in two formats: a fine-tuned Gemma-7B model, and a distilled BERT-based model derived from the Gemma model to improve inference speed. To evaluate Axpert, the group compared its performance with that of BERT (Bidirectional Encoder Representations from Transformers) models, specifically BlueBERT, which was also fine-tuned on the datasets.
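The article does not spell out how the Gemma model was distilled into a BERT-based model. One common pattern, sketched here only as an illustration, is hard-label distillation: a large "teacher" labels reports, and a smaller, faster "student" is trained on those machine-generated labels. In this toy version, a hypothetical keyword rule (`teacher_label`) stands in for the fine-tuned Gemma teacher and a multinomial Naive Bayes classifier (`NaiveBayesStudent`) stands in for the BERT-based student. Both are assumptions, neither reflects Axpert's actual code, the "uncertain" label is dropped for brevity, and the sample reports are invented.

```python
import math
from collections import Counter

# NEC-related findings named in the article; used only by the toy teacher.
FINDINGS = ("pneumatosis", "portal venous gas", "free air")


def teacher_label(report: str) -> str:
    """Hypothetical stand-in for the fine-tuned Gemma teacher.

    A real teacher would be a large language model; this keyword rule exists
    only so the sketch runs without model weights. It handles a single
    negation pattern ("no <finding>") and ignores the 'uncertain' class.
    """
    text = report.lower()
    for finding in FINDINGS:
        if finding in text and f"no {finding}" not in text:
            return "positive"
    return "negative"


def tokenize(text: str) -> list[str]:
    # Lowercase, turn periods and commas into spaces, split on whitespace.
    return text.lower().replace(".", " ").replace(",", " ").split()


class NaiveBayesStudent:
    """Hypothetical lightweight student: multinomial Naive Bayes, Laplace smoothing."""

    def fit(self, reports, labels):
        self.classes = sorted(set(labels))
        self.priors = Counter(labels)
        self.n_docs = len(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for report, label in zip(reports, labels):
            self.word_counts[label].update(tokenize(report))
        self.vocab = set().union(*(wc.keys() for wc in self.word_counts.values()))
        return self

    def predict(self, report: str) -> str:
        best_class, best_score = None, -math.inf
        for c in self.classes:
            total = sum(self.word_counts[c].values())
            # Log prior plus the add-one-smoothed log likelihood of each token.
            score = math.log(self.priors[c] / self.n_docs)
            for token in tokenize(report):
                count = self.word_counts[c][token]
                score += math.log((count + 1) / (total + len(self.vocab)))
            if score > best_score:
                best_class, best_score = c, score
        return best_class


# Invented, unannotated example reports (not from the study).
unlabeled_reports = [
    "Pneumatosis intestinalis in the right lower quadrant.",
    "Portal venous gas is present.",
    "Free air under the diaphragm.",
    "Bowel gas pattern is normal. No pneumatosis.",
    "Nonobstructive bowel gas pattern. No free air.",
    "No portal venous gas. Normal bowel gas pattern.",
]

# Step 1: the teacher labels the reports; no human annotation is used here.
teacher_labels = [teacher_label(r) for r in unlabeled_reports]

# Step 2: the student is trained only on the teacher's labels.
student = NaiveBayesStudent().fit(unlabeled_reports, teacher_labels)

print(teacher_labels)
print(student.predict("No pneumatosis seen."))          # negative
print(student.predict("Extensive pneumatosis noted."))  # positive
```

With six invented reports the student is only a toy; the point is the data flow (teacher labels in, cheaper model out), not accuracy. In the study's setting, the payoff of distillation is that the student runs much faster at inference than a 7-billion-parameter model.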

Testing showed that Axpert outperformed the baseline BERT models on all metrics. Specifically, Gemma-7B improved on BlueBERT's F1 score for detecting NEC-positive samples by 132%, while the distilled BERT model surpassed all expert-trained baseline BERT models.
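The article reports the Gemma-7B gain as a percentage change in F1 score but does not quote the underlying values. F1 is the harmonic mean of precision and recall, and, assuming the 132% figure is a relative change, it means the new score is 2.32 times the baseline. The sketch below shows both calculations; the confusion counts and the 0.30 baseline are purely hypothetical, not numbers from the study.

```python
import math


def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = harmonic mean of precision and recall (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def relative_improvement(new: float, baseline: float) -> float:
    """Relative change; 1.32 corresponds to a 132% improvement over baseline."""
    return (new - baseline) / baseline


# Hypothetical confusion counts for the NEC-positive class (not from the study).
print(round(f1_score(tp=8, fp=2, fn=4), 3))  # 0.727

# Hypothetical baseline F1 of 0.30: a 132% gain implies 0.30 * 2.32 = 0.696.
baseline_f1 = 0.30
improved_f1 = baseline_f1 * (1 + 1.32)
print(round(improved_f1, 3))  # 0.696
print(math.isclose(relative_improvement(improved_f1, baseline_f1), 1.32))  # True
```

Because F1 cannot exceed 1, a 132% relative gain also implies that the baseline F1 for NEC-positive samples was below about 0.43 (1 / 2.32), again assuming the figure is a relative rather than an absolute change.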

“Axpert demonstrates potential to reduce human labeling efforts while maintaining high accuracy in automating NEC diagnosis with AXR, offering precise image labeling capabilities,” the group wrote.

The authors added that, to their knowledge, this study is the first attempt to automatically extract labels from abdominal radiology reports. They also noted that the American College of Radiology (ACR) recently issued a white paper emphasizing that the lack of AI tools for pediatric radiology is a health equity issue.

Ultimately, the study explores the potential of large language models for analyzing radiology reports and demonstrates a practical way to deploy an advanced model, according to the group. The source code for Axpert is available at github.com/kayvanlabs/Axpert, the researchers noted.

“Future work will include external training and validation across multiple children’s hospitals and, if necessary, the incorporation of [retrieval-augmented generation] to enhance performance,” the group concluded.
